If you guys could do a 64-bit version, which could use up to a TB of RAM, that would really put you above the competition.

Let’s see. A 1 TB novel. That would make War and Peace look like a short story.

64-bit Windows can handle anywhere from 8 GB to 192 GB of RAM in Windows 7, depending on the edition, and up to 2 TB on Windows 10 (128 GB on Home), while 32-bit Windows can only handle 4 GB at most. RAM, short for Random Access Memory, is the computer’s fast working memory, something akin to your short-term memory.
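The 4 GB ceiling for 32-bit comes straight from pointer width: a 32-bit address can name 2^32 distinct bytes. A quick sketch of that arithmetic (on Windows, a 32-bit process gets only the lower 2 GB as user space by default, which is the "2 GB wall" discussed below):

```python
# Address-space arithmetic: how much memory an N-bit pointer can address.
GIB = 1024 ** 3  # one gibibyte, in bytes


def addressable_gib(pointer_bits: int) -> float:
    """Total bytes addressable with pointer_bits-wide addresses, in GiB."""
    return (2 ** pointer_bits) / GIB


print(addressable_gib(32))  # whole 32-bit address space
print(addressable_gib(31))  # default user-mode half for a 32-bit Windows process
print(addressable_gib(64))  # theoretical 64-bit space, far above any edition's cap
```

The per-edition limits quoted above are caps that Windows sets well below the theoretical 64-bit ceiling.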

The reason I ask is that I noticed that, with 212 subdocuments in my novel folder, it takes Scrivener a moment to split at the selection. I am only just over halfway through splitting my novel. By going 64-bit, Scrivener could use the 12 GB of RAM in my machine and be a lot quicker than it is now, limited to the 2 GB maximum that 32-bit apps get when running under a 64-bit OS.

Applications can crash when they hit the 2 GB “wall,” which actually happened to me recently when I was working on my newspaper route in Scrivener. (Off topic: the drag and drop and output to .doc is perfect for dynamically rearranging my route!) In a worst-case scenario it can crash the entire system and kick up a blue screen of death. How many times have you been working on something and Windows crashes, stating that a memory block could not be read? Sometimes that’s a memory leak, and other times it’s the memory wall; it depends on the memory address and the *.sys file displayed.

Anyway, I noticed that the other writing software out there is all 32-bit. If you guys were to go 64-bit, you would leap ahead of the rest of the writers’ suites and probably gain a lot more customers who are tired of the 2 GB wall.

I haven’t got any insight about the development team’s stance on 32 vs. 64 bit, but to address your point about how slow the split-at-selection feature is… the only thing that will speed that up, I think, is a faster hard drive. If you are using a standard hard drive, then upgrading to a solid state drive would probably show a marked increase in responsiveness when splitting a very large document. As the document you’re splitting shrinks, so too will the time it takes to split.

Scrivener is doing a lot of drive access to accomplish the split: creating a new file, adding a significant amount of text to it, then removing that text from the original file, and finally saving those new/modified files from RAM to the hard drive. I really don’t think that access to more RAM would have that big an impact, unless Scrivener is trying to use more than 2 GB; it’s not like Scrivener is preventing the OS from allocating the rest of your RAM to other applications.
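A minimal sketch of the drive work described above, assuming each document is stored as its own plain-text file. This is not Scrivener’s code or project format, and `split_document` is a hypothetical name. Each `os.fsync` call waits until the drive reports the data is stored, which is why a faster disk helps a split more than extra RAM does:

```python
import os
import tempfile


def split_document(path: str, offset: int, new_path: str) -> None:
    """Split the text file at `path` at character `offset`.

    Text after the split point moves to a new file at `new_path`;
    the original keeps only the text before it.
    """
    with open(path, encoding="utf-8") as f:
        text = f.read()
    head, tail = text[:offset], text[offset:]

    # 1. Create the new file and write the split-off text into it.
    with open(new_path, "w", encoding="utf-8") as f:
        f.write(tail)
        f.flush()
        os.fsync(f.fileno())  # block until the drive has the data

    # 2. Rewrite the original file without the moved text.
    with open(path, "w", encoding="utf-8") as f:
        f.write(head)
        f.flush()
        os.fsync(f.fileno())


# Demo in a throwaway directory.
with tempfile.TemporaryDirectory() as d:
    original = os.path.join(d, "scene.txt")
    with open(original, "w", encoding="utf-8") as f:
        f.write("Chapter one. Chapter two.")
    split_document(original, 13, os.path.join(d, "scene2.txt"))
    # scene.txt now holds "Chapter one. " and scene2.txt holds "Chapter two."
```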

That explains the lag. I have been wanting to upgrade to an SSD for a long time, and I just found a hack of sorts that allows the full 4 GB of RAM available to 32-bit apps, and it made very little difference. (Of course I backed up the .exe.) Knowing that Scrivener actually writes to the disk while it splits the file is quite helpful. I have a WD Black edition as my master drive, and the Scrivener files are actually stored on a Seagate Barracuda Green for OneDrive access, since my OneDrive folder is so large, with my documents and pictures inside just in case something fails.

However, it is slow even on my netbook; not quite as slow, but slow. I guess I’ll finally invest in an SSD instead of a SATA hard drive for my OS and documents this Christmas.

You can also create a RAM drive for temporary storage. Make sure it is backed by a real disk to prevent data loss.

We use proprietary code for this on Windows servers, but a simple search online will turn up some solutions that give you a much cheaper way to get performance (RAM is cheaper than an SSD). Linux/Mac folks already have the tools, so just look it up online.
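A real RAM drive is an OS-level tool, not something you write in a script, but the “backed by real disk” idea can be sketched as a write-through cache: reads come from memory, while every write also goes straight to a backing directory, so the disk copy is always current. `WriteThroughCache` is a hypothetical name used only for illustration:

```python
import os
import tempfile


class WriteThroughCache:
    """Serves reads from RAM but writes every change through to disk at once."""

    def __init__(self, backing_dir: str):
        self.backing_dir = backing_dir
        self._mem: dict[str, bytes] = {}

    def _disk_path(self, name: str) -> str:
        return os.path.join(self.backing_dir, name)

    def write(self, name: str, data: bytes) -> None:
        self._mem[name] = data  # fast copy in RAM
        with open(self._disk_path(name), "wb") as f:  # durable copy on disk
            f.write(data)

    def read(self, name: str) -> bytes:
        if name not in self._mem:  # cache miss: load from disk once
            with open(self._disk_path(name), "rb") as f:
                self._mem[name] = f.read()
        return self._mem[name]


with tempfile.TemporaryDirectory() as d:
    cache = WriteThroughCache(d)
    cache.write("chapter1.txt", b"It was a dark and stormy night.")
    # A fresh cache with an empty RAM side still finds the data on disk.
    print(WriteThroughCache(d).read("chapter1.txt"))
```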

I’ve been wanting an SSD for a while, and Newegg has some on sale for Black November, so I’ll get one and move my Windows install over to it. That would be more beneficial in the long run than a rather risky RAM drive that’s limited by my actual RAM, even if it is backed up on an HDD.

Thank you for that option though!

Running a 64-bit OS and 128 GB of RAM… well… that’s more than enough, as the RAM only acts as a read/write cache for open file handles. We typically limit the RAM disk to 2 GB, as any file much larger than that would be a .dbf extent for Oracle or SQL Server. Anyway… something to consider for a low-cost performance boost.

I also create 3D models when I am not writing, or when I need to see something to describe it, so the extra RAM doesn’t go to waste. I would love to get the full 32 GB that my motherboard can support. When I added 8 GB I had a substantial performance increase in my 3D modeling programs, so for me, because of my other hobby, it’s worth maxing out my RAM.

For grins and giggles we loaded a 4-socket UCS blade with 2 TB of RAM (and 40 cores). The EMC SSD chassis supports a stupid level of disk IO… let’s just say that for the first time ever, I didn’t feel that Windows was slower than my Unix desktops (and for the record, the issues with Windows are the non-MS-supplied components that are pretty much required these days, so I’m not knocking MS for it).

But yeah… not a normal system for comparison.

I need to get one of those for my house.

lol, yeah… I thought it might be fast. I think if I get an SSD and the 32 GB of RAM that my motherboard supports, I’ll have a wicked fast machine. I just need to figure out what’s hogging my 1 TB main drive; I’ve been trying to reclaim space but haven’t had much luck. I’ll probably start moving my programs over to my other hard drives next. Then again, it could be my 3D library clogging up the drive too.

Edit: yeah… 103 GB of content, which must go over to the storage drive lol. I’ve gotten a lot of Daz content over the last 6 years lol

Are you actually hitting 2 GB of RAM usage in Scrivener? Even with a massive project that should be very difficult, as the software doesn’t load the entire thing into RAM at once (that would be crazy; some people store gigabytes of research). If it does get that high, there could be a memory leak in the software that you’re repeatedly running into. It may be worth keeping an eye on its usage with Task Manager while you work, to see if any operations cause a sudden spike that is never reclaimed.
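If you jot down memory readings from Task Manager or Process Explorer after each operation, a simple heuristic can flag the “spike that is never reclaimed” pattern: a jump larger than some threshold after which usage never returns to the pre-jump level. This is a rough sketch, not a real leak detector; the function name and the sample readings (in K) are made up for illustration:

```python
def unreclaimed_spikes(samples: list[int], jump_k: int) -> list[int]:
    """Return indices where memory jumped by more than jump_k and every
    later reading stayed above the pre-jump level (never reclaimed)."""
    flagged = []
    for i in range(1, len(samples)):
        before = samples[i - 1]
        if samples[i] - before > jump_k:
            # Reclaimed if any later reading falls back to the old level.
            if all(s > before for s in samples[i:]):
                flagged.append(i)
    return flagged


# Hypothetical readings in K, one per operation.
readings = [100_000, 100_000, 400_000, 100_000, 100_000, 600_000, 650_000, 640_000]
print(unreclaimed_spikes(readings, 100_000))  # [5]: the jump at index 2 was reclaimed
```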

I agree with the above, disk IO speeds (especially) and raw CPU (for some operations like compile) are going to benefit a writing program more than RAM. Text, even formatted text, is just about as efficient a data type as can be.
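Some rough arithmetic shows how compact plain text is. Assuming about 6 bytes per word (roughly five ASCII letters plus a space, one byte each), a 141,000-word manuscript like the one mentioned in this thread is well under a megabyte; RTF formatting overhead is not modeled here:

```python
def estimate_plain_text_bytes(words: int, bytes_per_word: float = 6.0) -> float:
    # Assumption: ~5 ASCII letters plus one space per word, 1 byte each in UTF-8.
    return words * bytes_per_word


size = estimate_plain_text_bytes(141_000)
print(size / 1024 ** 2)  # about 0.81 MiB
```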

I don’t think I’ve hit the 2 GB wall yet, despite having 141k words in it and splitting the file up (the program did slow down drastically when I got to around 150 pieces, but that could just be from reading/writing data, since it doesn’t do that with smaller chunks). It did crash to the desktop, but I think that was more of a file-import issue. I was working on my paper route and imported the original .doc that my manager sent me last year instead of the Google Docs export with a similar name. Both my book and the route winked out of existence when I did that. I don’t know what kind of .doc the paper company uses, but Word doesn’t like it either; I can only open it in LibreOffice. However, I have gotten as high as 1 GB with Scrivener when I used split screen with the full document in one pane and the scene in the other, but I think that was more of a multitasking thing than anything.

Ioa, am I incorrect in thinking that folks like me, who aggressively split and then use Scrivenings mode, would see higher RAM utilization? My thought is more file objects (handle, position, read/write buffer, undo buffers, etc.).

So a person with 3K words in one file could possibly use less RAM than a person with 2K words in 30 files.

Yes? no?

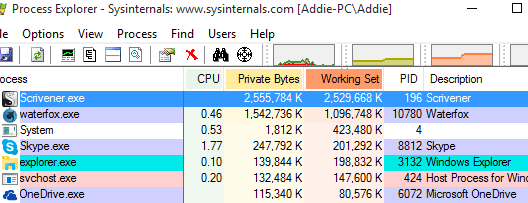

I’ve been keeping an eye on it with Process Explorer and noticed that the working set is slowly growing as I chop up my novel. I already adjusted Scrivener so it can use 4 GB of RAM instead of 2, but there wasn’t much of a difference, so the slowdown I noted must be a read/write thing and not the RAM as I first suspected. Currently I am at just shy of 190K of RAM with 202 documents (I removed some that weren’t needed once I discovered the chapter system).

Wanting a 64-bit text editor… Interesting, to say the least… O_o

I know, right? It might be overkill, but it’s nice to know that if it ever reaches that wall, it’s not going to crash.

Speaking of going past 2 GB, it actually did, and all I did was save the file and then view the entire book so I could do a global save.

And it’s still growing O.o It makes me thankful I patched it to go past 2 GB.

Scrivenings is more of a memory issue with the Windows implementation. To get into the details of the differences a bit: on a Mac, if you take 30 files and stitch them into a Scrivenings session, the software uses magic to get all of the data from the included RTF files into a single text editor. In a sense it truly is a “single file,” at least in RAM; it just has methods of extracting changes from that “file” on the fly back into the original data sources. Thus using 30 files to fill the text editor instead of 1 similarly sized file makes an insignificant difference in terms of RAM usage.
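A toy sketch of the “single file in RAM” idea, assuming plain strings rather than RTF. This is not Scrivener’s code, and `Session` is a hypothetical name. The core data structure is a sorted list of section start offsets: a binary search finds which source document owns any position, and an edit only has to shift the offsets of the sections after it:

```python
from bisect import bisect_right


class Session:
    """One combined text buffer plus the offset where each source starts."""

    def __init__(self, sources: list[str]):
        self.starts: list[int] = []
        pos = 0
        for text in sources:
            self.starts.append(pos)
            pos += len(text)
        self.buffer = "".join(sources)

    def source_at(self, offset: int) -> int:
        """Index of the source document that owns a buffer offset."""
        return bisect_right(self.starts, offset) - 1

    def insert(self, offset: int, text: str) -> None:
        """Insert text into the buffer and shift every later section start."""
        owner = self.source_at(offset)
        self.buffer = self.buffer[:offset] + text + self.buffer[offset:]
        for i in range(owner + 1, len(self.starts)):
            self.starts[i] += len(text)

    def extract(self) -> list[str]:
        """Split the combined buffer back into per-document texts."""
        ends = self.starts[1:] + [len(self.buffer)]
        return [self.buffer[s:e] for s, e in zip(self.starts, ends)]


s = Session(["Ch1.", "Ch2."])
s.insert(2, "XX")
print(s.extract())  # ['ChXX1.', 'Ch2.']
```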

On Windows, we unfortunately do not have the necessary ingredients to build a session inside of one text editor. There is no static and safe way to say that this text here falls into section #23 and, after this code, #24. So what we’re doing there is building 30 text editors and stacking them in a scroll view. As a result there is a more direct correlation between memory usage and session size in terms of the number of included items (not words so much: the chunk of code that makes up a text editor weighs more than the words inside it).
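To put the earlier “3K words in one file vs. 2K words in 30 files” question into rough numbers, here is a toy cost model where each text editor carries a fixed overhead and words add only a little. The constants (500 KB per editor, 10 bytes per word) are invented purely to show the shape of the relationship; the real figures are unknown:

```python
EDITOR_OVERHEAD_BYTES = 512_000  # hypothetical fixed cost of one text editor
BYTES_PER_WORD = 10              # hypothetical cost of text held in an editor


def session_memory_bytes(items: int, words: int, one_editor: bool) -> int:
    """Estimated session memory: editors times overhead, plus the words."""
    editors = 1 if one_editor else items
    return editors * EDITOR_OVERHEAD_BYTES + words * BYTES_PER_WORD


print(session_memory_bytes(1, 3_000, one_editor=True))    # 3K words, one file
print(session_memory_bytes(30, 2_000, one_editor=False))  # 30 files, one editor each
print(session_memory_bytes(30, 2_000, one_editor=True))   # 30 files, single combined editor
```

Under these assumptions the item count, not the word count, dominates once every item gets its own editor.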

Fortunately the transition to the newer Qt version includes the necessary tools to build a more efficient system like the one the Mac uses for Scrivenings, so this won’t always be a problem. It’s something to consider for now though, and it might indeed be easier to hit 2 GB than I had thought offhand. Definitely some interesting testing there.

Either way, I still think the best approach for us is to continue to optimise the software so that it doesn’t use so much memory, rather than just throw more bits at it and band-aid over the inefficient usage. That’s just my opinion though; I’m not precisely sure what the plans are for 32-bit or 64-bit in the future. I’m sure eventually it will just be what everything is; it is already decidedly headed that way on the Mac.

Since I found that little 32-bit app 4 GB RAM patch, 4gb_patch.zip (21 KB), I’ve applied it to all of my most-used software (I bought Scrivener yesterday btw, YAY!) on both of my machines. I’ve seen a significant improvement in the games that I play to relax. It seems like they like stretching their legs out or something.
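Patches like this are generally understood to work by setting the `IMAGE_FILE_LARGE_ADDRESS_AWARE` flag (0x0020) in the executable’s PE header, which lets a 32-bit program use up to 4 GB of address space on 64-bit Windows. The sketch below only reads and sets that one bit in the COFF Characteristics field. It is an assumption that 4gb_patch.zip does nothing more (the real tool may, for example, also update the header checksum), so back up the .exe before experimenting:

```python
import struct

IMAGE_FILE_LARGE_ADDRESS_AWARE = 0x0020  # COFF Characteristics flag


def _characteristics_offset(data: bytes) -> int:
    """Locate the 2-byte Characteristics field in a PE image."""
    if data[:2] != b"MZ":
        raise ValueError("not an MZ/PE executable")
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)  # e_lfanew
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("PE signature not found")
    # The COFF header follows the 4-byte signature; Characteristics sits at +18.
    return pe_offset + 4 + 18


def is_large_address_aware(data: bytes) -> bool:
    (flags,) = struct.unpack_from("<H", data, _characteristics_offset(data))
    return bool(flags & IMAGE_FILE_LARGE_ADDRESS_AWARE)


def set_large_address_aware(data: bytes) -> bytes:
    """Return a copy of the image with the large-address-aware bit set."""
    off = _characteristics_offset(data)
    (flags,) = struct.unpack_from("<H", data, off)
    patched = bytearray(data)
    struct.pack_into("<H", patched, off, flags | IMAGE_FILE_LARGE_ADDRESS_AWARE)
    return bytes(patched)
```

To check a real program, read it with `open(path, "rb").read()` and pass the bytes to `is_large_address_aware`.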

Anyway, I was not in Scrivenings mode; I had just saved and then started doing some global find-and-replaces. I looked over at Process Explorer (I have 3 monitors for my 3D hobby) and saw that Scrivener had broken the 2 GB barrier. It hovered around 2 GB until I closed it. That’s 2.41247940063477 gigabytes (I checked it here http://www.convertunits.com/from/kilobyte/to/gigabyte), so I guess something is going on in the background.
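Process Explorer reports memory in K, where 1 K is 1024 bytes, so converting to binary gigabytes (GiB) means dividing by 1024² = 1,048,576. For example, a reading of 2,529,668 K gives the 2.41247940063477 figure above. The decimal conversion (1 GB = 10⁹ bytes) is included to show why different converters can disagree:

```python
def kb_to_gib(kb: int) -> float:
    """Convert a reading in K (1 K = 1024 bytes) to binary gigabytes."""
    return kb / (1024 ** 2)


def kb_to_gb_decimal(kb: int) -> float:
    """Convert the same reading to decimal gigabytes (1 GB = 10**9 bytes)."""
    return kb * 1024 / 10 ** 9


print(kb_to_gib(2_529_668))         # about 2.4125 GiB
print(kb_to_gb_decimal(2_529_668))  # about 2.5904 GB
```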

Currently I have 238 parts to my book, with 5 more chapters to chop up. Once it’s chopped up, the entire book will get a good examination and overhaul, because it needs to go on a word-loss diet.

I’ll keep an eye on it to see how big it really gets. On startup it only uses about 100K of memory, but it slowly grows the more I work with my file. I’ve used Windows most of my life; I’ve toyed with iPads and stuff, but it just isn’t my thing. I’ve been thinking about getting a MacBook, but until I actually sit down and test the OS to see if it’s for me, I’m not serious.

Edit:

Now this is weird: once I got past 260 documents (I added image folders), Scrivener dropped to 30-40K and sped back up to the way it worked before it lagged out. Quick and speedy. So it seems that once it gets enough subdocuments, Scrivener’s optimization kicks in and speeds it back up. If you could just get that same optimization working at 160-260 documents, it would really speed up.

Edit 2:

I am working on the second book, importing it into Scrivener for easy reference. It’s a longer book that I need to really chop up to decide which scenes need to be put aside for a new book. I glanced over at Process Explorer and found that Scrivener has gone up to 3 GB.

Perhaps you should include the 4 GB patch in a future release of Scrivener. I don’t know what would have happened had I not patched it, but it worries me a little that Scrivener can eat up this much memory.

At least under OS X, Scrivener uses bugger all memory: ~200 MB with my current 150,000-word project (double that length with notes and dozens of reference images and docs). But all those files mean, potentially, a lot of disk IO, so a slow (non-SSD) disk could impact performance more than RAM.

My own call for 64-bit is to ensure the application can use Apple’s modern APIs. For example, Force Touch under El Capitan doesn’t work properly (discussed at length elsewhere) due to Scrivener being 32-bit.