This is a really interesting article. Thanks for posting it!

Here’s the TL;DR.

The task that generative A.I. has been most successful at is lowering our expectations, both of the things we read and of ourselves when we write anything for others to read. It is a fundamentally dehumanizing technology because it treats us as less than what we are: creators and apprehenders of meaning. It reduces the amount of intention in the world.

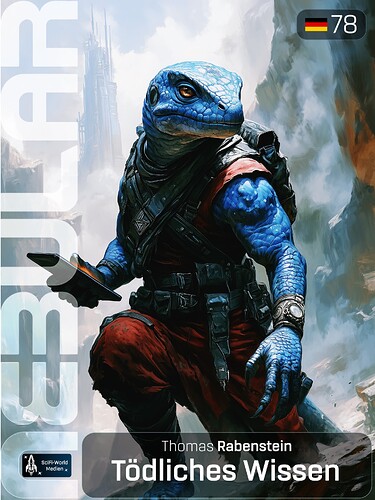

I fundamentally disagree, because AI not only gives us new and improved options for the early detection of various cancers in X-rays, but also significantly improves the evaluation of large amounts of data (weather simulations, vaccine analyses, etc.). In particular, generative imaging AI finally allows me, as an author, to create visualizations for my novels (cover images, illustrations) that are more in line with my imagination. AI helps with translations, spell- and grammar-checking, and can support authors in many ways to improve their own workflow. I don’t see any dehumanization here, but a new era of useful tools that help us focus on the creative part of the work.

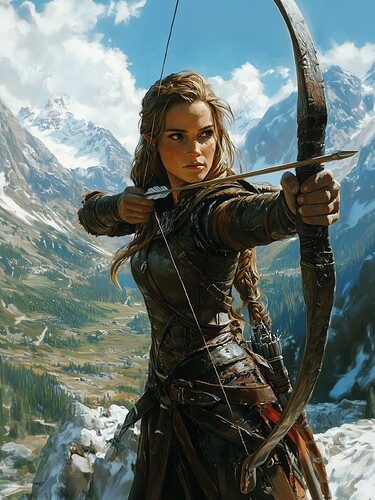

PS: An example of an interior illustration created with MidJourney.

Here come the pseudo-scientific statements to numb us with so-called facts from renowned institutions and celebrity know-it-alls, who probably couldn’t tell you how to make their own bed in the morning.

No. Just no. There is a world of difference when AI acts as a research assistant, which is something it can actually do well and which is actually needed.

But those same things you mentioned? The imaging stuff also generates false positives and can get weird over time. Humans have to double-check the work big time. Now, that said, working in tandem with AI, radiologists do potentially have greater capability and accuracy. It hasn’t been proven at large scale at this point.

What was the prompt that generated this image?

How is that the same level of talent and dedication that it would take to create this for real? In oil paints? In Procreate?

It’s better than that: the creators of these LLMs don’t even really know how they work.

But are your “facts” the truth and your views the true answer to this discussion? My friend, I see a huge amount of “bias” in your answer and therefore find it hard to take it seriously.

Right, definitely no bias in conflating medical and scientific research potentiality and extending that to human creativity. Definitely not at all.

I have re-read my previous answer and I cannot find any sentence in which I equate prompt design with artistic talent.

However, after more than a year of familiarizing myself with the topic and reading a lot of nonsense from so-called artists about image-generating AI, I have to say that it does take a certain amount of talent and knowledge to have the AI create an illustration based on your own ideas that also stands up to critical scrutiny.

At least my experience says that my previous collaboration with artists and illustrators was not always satisfactory in terms of the realization of my ideas and the quality of the result.

To elaborate on this topic a little, I don’t know of any cover illustrators who still sit down and create a cover as an oil painting. That would be too time-consuming and unaffordable. Instead, most “artists” use stock photos and create image collages using Photoshop techniques. This is why there are many recurring image motifs on covers. Is this art, or is it criticism?

I myself do not claim for a moment to be an “artist” or to have the talent to draw photorealistic images, but I came to AI imaging from 3D art and rendering (Blender), and I combine the two. This is how unique cover images are created that exactly match my vision. As an author, that’s exactly what I want.

Sorry if I don’t post the prompt here, because finding the right one took me several hours.

Some people might think that if you write three or four words together, you get the perfect picture. I can say in conclusion that this is not how the process of creation works.

Well, I do have a certain bias because I am a cancer patient and I have discussed this with my attending physician. My hospital is running a trial/pilot project that assesses the growth of metastases over several CTs, for example. But to put your mind at rest, the final arbiter is still the human being, which is why I also see imaging AI as a tool and not as a final opponent. Artists should perhaps consider the fact that they will eventually make themselves redundant if they refuse to integrate the new tools into their creative process, just as coachmen once did when the car came along. Wishing away AI technology will no longer work.

The article I linked discusses this question. If writing a prompt to generate the image you want takes hours, then yes, you have used the AI as a tool to realize your own creative vision. Not as a substitute for it.

(OTOH, your archer is unlikely to actually hit anything. Her draw is coming to the wrong place.)

Well, she’s a fantasy woman of a fantasy story. Women with pointed ears don’t exist either, so maybe she does hit the target after all.

Well, all that to say it isn’t actually art. Even combining those two effects. Once you use Midjourney, it can’t be replicated in a reliable fashion, even with the same prompt. It also can’t be trademarked or copyrighted. I can reuse any AI art for any purpose anytime I want.

But it is not the process of creation. What you are describing is the process of recreating what has already been created by someone else (not compensated) and then mixed with someone else’s creations (also not compensated).

“Prompt engineering” is not anything real. There is no real knowledge or skill there.

Hahaha. What a statement! This sums up the pointlessness of the entire exercise. Because none of this is actually made by skilled professionals, nothing can be meaningfully changed. If it could, that would render the AI unneeded.

Similar to people who think that AI code means programmers aren’t needed any more. Dude, code is the easy part. The hard part is getting the proper understanding of the datasets, the existing environment, and most of all, the actual requirements.

And you can absolutely tell. Just not good.

I am not sure I agree. Or, even if slightly creative, it isn’t meaningfully creative. If you can’t make adjustments, and it can’t be assigned trademark or copyright, then all it is is someone using a bunch of other artists’ works as some sort of hodgepodge of a creation at best. At worst it is a pointlessly derivative pseudowork.

Either way it isn’t going to stand the test of time. It will be added to a maelstrom of AI-generated art that will reduce what little meaning may have been there.

Exactly why it is critical that AI “slows its roll”. But again, you are talking about two unrelated subjects here. Most people, including me, would agree with the role of AI as a research assistant to professionals. Like, in this situation, it can assist radiologists, and when wrong, a human can correct.

Now, again, if we are talking SLMs or generative fills or other minor AI “techniques”, then artists are already using those to create better works that are, in fact, allowed to be copyrighted.

But this is no car-instead-of-stagecoach situation. It’s just not. I am in this field.

I disagree with your statement. Do you actually know what you’re talking about? I’m not talking about one-liners for funny cat pictures here.