> A similar use case would be if someone wanted to make a single volume out of several Gutenberg Project non-fiction texts and were to use Scrivener to stitch them together.

I suppose, but I wouldn’t use Scrivener for that job: it’s quite unsuitable, and overkill if that’s all you’re looking to get out of it. Scrivener does many great things, but stitching files together isn’t one of its unique strengths, nor is it especially efficient at it. The operating system itself, with its core command-line tools, can concatenate files far more efficiently than an application like Scrivener ever could:

```shell
cat book1.md >> master_file.md
cat book2.md >> master_file.md
# (etc.)
```
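With more than a handful of files, a loop saves typing. One small gotcha with plain `cat` is that if a file doesn’t end with a newline, its last line gets glued onto the first line of the next file. A minimal sketch that avoids this by adding blank lines after each file; the sample files and their contents are hypothetical stand-ins, created in a scratch directory so nothing real is touched:

```shell
#!/bin/sh
# Work in a throwaway directory so existing files are not touched.
cd "$(mktemp -d)" || exit 1

# Hypothetical sample inputs; book2.md deliberately lacks a trailing newline.
printf '# Book One\n\nText of book one.\n' > book1.md
printf '# Book Two\n\nText of book two.' > book2.md
printf '# Book Three\n\nText of book three.\n' > book3.md

# Write each file followed by a blank line, so a missing final newline
# can't merge one file's last line with the next file's heading.
# The glob expands in sorted order: book1.md, book2.md, book3.md.
for f in book*.md; do
  cat "$f"
  printf '\n\n'
done > master_file.md

cat master_file.md
```

Using `>` on the whole loop (rather than `>>` per file) also means re-running it starts `master_file.md` fresh instead of appending a second copy.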

Where the compiler excels is in changing the contents of “book1.md” on the fly, sometimes radically: inserting a deep heading structure derived from the binder outline, for instance, or wrapping syntax around objects such as image sources. If you aren’t using any of that, though, and just want a stitching tool, it’s a poor fit.

That said, if we’re talking about Markdown, physically stitching the files together (even into a temporary file) often isn’t necessary anyway, since both Pandoc and MultiMarkdown can take multiple .md files as input and combine them into a single output file.

```shell
pandoc -t epub -o multi_volume_book.epub current-project-*.md
```

The asterisk is a shell wildcard: the shell expands it to every file whose name starts with ‘current-project-’ and ends in ‘.md’, so ‘current-project-01.md’ through ‘current-project-15.md’ are all passed to Pandoc to create the .epub.
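Because the shell, not Pandoc, expands the asterisk, the files arrive in the shell’s sorted order, which is alphabetical rather than numeric (Pandoc then joins them, with blank lines between them, in the order given). That is why zero-padded numbers like 01 and 02 matter: without them, ‘current-project-10.md’ sorts before ‘current-project-2.md’. A small sketch demonstrating this in a scratch directory with empty placeholder files (the names are hypothetical); it forces byte-order sorting so the result doesn’t depend on your locale:

```shell
#!/bin/sh
# Scratch directory with empty placeholder files (hypothetical names).
cd "$(mktemp -d)" || exit 1
# Byte-order sorting, so the demonstration doesn't depend on the locale.
LC_ALL=C
export LC_ALL

# Without zero padding, 10 sorts between 1 and 2.
touch current-project-1.md current-project-2.md current-project-10.md
unpadded=$(echo current-project-*.md)
echo "unpadded: $unpadded"

# With zero padding, alphabetical order matches numeric order.
rm current-project-*.md
touch current-project-01.md current-project-02.md current-project-10.md
padded=$(echo current-project-*.md)
echo "padded:   $padded"
```

Running `echo current-project-*.md` in your own project folder before calling Pandoc is a cheap way to check the order the files will be stitched in.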

MultiMarkdown also supports something Pandoc doesn’t offer directly, which it calls “transclusion” (written as `{{filename}}`). In principle it is the same as the `\input` command in LaTeX (or, for that matter, the `<$input>` placeholder in Scrivener). As you know, that’s a great way to keep individual files from growing to mammoth size, and to keep the current .tex file focused on its own scope rather than branching off into configuration details and the like.

> At this point I might try just compiling a few files at a time and see what happens…

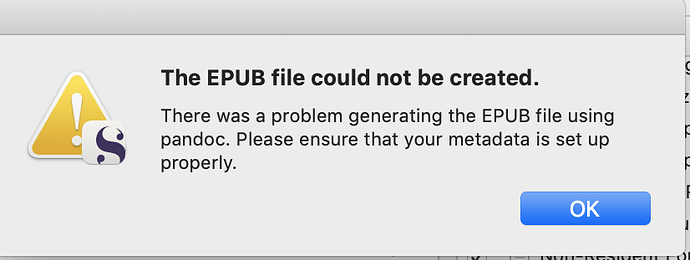

But you said it compiled a complete .md file successfully, right? There isn’t much more to learn from Scrivener (there is one thing, but we’ll get to that). The next step in the diagnostic chain is to see whether Pandoc can convert this .md file to .epub in one go. How that test goes will determine what to test next.

> I will either add hashmarks via a ctrl+F (will take a while) or try to find someone to write an awk script that will distinguish between hashmarks at the beginning of the line and anywhere else.

That’s not a complex regular expression, if that’s all you need; the `^` anchors the match to the start of the line:

```
^Chapter
```
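For example, `sed` can use that expression to add a Markdown heading marker only to lines that begin with “Chapter”, leaving “Chapter” or `#` elsewhere in a line alone. A minimal sketch, assuming each chapter title sits on its own line starting with the word “Chapter”; the sample text is hypothetical, and the heading level (`#`) is a guess that depends on how you structure the books:

```shell
#!/bin/sh
cd "$(mktemp -d)" || exit 1

# Hypothetical sample text; only the first line should become a heading.
printf 'Chapter 1. The Voyage\nAs noted in Chapter 3, issue #4 was late.\n' > book1.md

# ^ anchors the match to the start of the line; & is the matched text.
sed 's/^Chapter/# &/' book1.md > book1-headed.md

# The same edit in awk, since that was the tool mentioned.
awk '/^Chapter/ { $0 = "# " $0 } { print }' book1.md > book1-headed-awk.md

cat book1-headed.md
```

Writing to a new file rather than editing in place keeps the original intact until you’ve checked the result (and `sed -i` behaves differently between GNU and BSD/macOS `sed`).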

You will want to have real headings in your books at some point, so that wouldn’t be wasted time. As it stands, what you’re producing may well crash ebook readers, or at least make them run very slowly. It may also be the source of the problem, but we don’t know that yet, so I didn’t want to suggest fixing it until other, easier-to-solve issues had been ruled out.

In short, though: the internal .xhtml files of an .epub are designed to be roughly chapter-length. From your description, you’ve got whole “books” in these. Small ones, to be fair, but some of them are still well beyond what anyone would reasonably call a “chapter”.
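To get a rough idea of how a compiled file will be carved up, you can count its level-one headings. Pandoc’s EPUB writer starts a new internal file at each level-one heading by default (adjustable with `--split-level`, called `--epub-chapter-level` before Pandoc 3.0), so a count of zero or one for a whole volume means one enormous .xhtml file. A rough sketch; the sample file is hypothetical, and the count only sees `#`-style headings (not underlined ones) and would also include `# ` lines inside code blocks:

```shell
#!/bin/sh
cd "$(mktemp -d)" || exit 1

# Hypothetical stand-in for a compiled file: two books, chapters as plain text.
printf '# Book One\n\nChapter 1\n\nText.\n\n# Book Two\n\nChapter 1\n\nText.\n' > master_file.md

# Count lines starting with "# " (ATX level-one headings).
# By default Pandoc starts a new internal .xhtml file at each of these.
splits=$(grep -c '^# ' master_file.md)
echo "level-one headings: $splits"
```

Here the result is two internal files, one per book, with every chapter inside them. If each book were a level-one heading and each chapter a level-two heading, `--split-level=2` would split at chapters instead.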